How Facebook's Algorithm Influences Political Divides and Solutions

Chapter 1: Understanding Algorithmic Influence

Facebook and Instagram have long been criticized for spreading misinformation and deepening political divides in the U.S. Research published on Thursday, July 27, 2023, offers new insight into how these platforms' algorithms work and into possible strategies for reducing their harmful effects.

The research, appearing in the journals Science and Nature, represents the first collaborative effort between Meta, the parent company of Facebook and Instagram, and 17 external researchers. They examined data from millions of platform users during the 2020 U.S. presidential election and surveyed a subset of users who opted to participate.

Section 1.1: Key Findings of the Research

One significant discovery is that liberals and conservatives consume distinct political content on Facebook, with minimal overlap. Additionally, conservatives are exposed to a greater amount of misinformation, primarily due to their reliance on less credible, more ideologically extreme sources.

Subsection 1.1.1: The Role of Algorithmic Prioritization

Photo on Unsplash

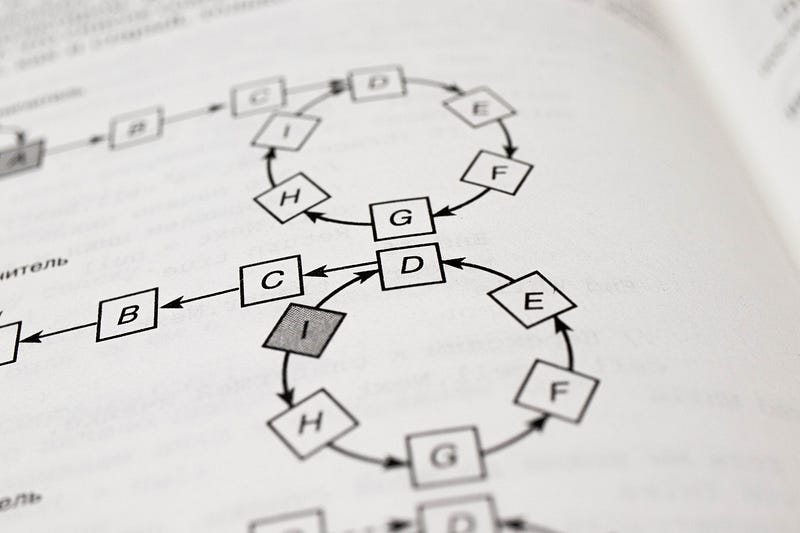

Researchers highlighted that Facebook’s algorithm, which curates content based on users' previous interactions, tends to prioritize content that is more engaging, emotional, and extreme. This can exacerbate polarization and misinformation, because users are more likely to see content that aligns with their views and less likely to encounter perspectives that challenge them.

Section 1.2: Potential for Algorithmic Change

The studies also indicated that adjusting the algorithm can significantly influence user experience and content visibility. For instance, when the researchers substituted the algorithm with a simple chronological feed of friends' posts, users encountered fewer posts overall, but their political views remained unchanged. When the option to share viral posts was disabled, users saw less political news and fewer posts from untrustworthy sources, again with no shift in political attitudes.
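To make the difference between these feed designs concrete, here is a minimal Python sketch. It is an illustration only, not Meta's ranking system: the `Post` fields, the `predicted_engagement` score, and the sample data are all hypothetical, and real ranking uses many more signals than a single number.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Post:
    author: str
    posted_at: datetime
    predicted_engagement: float  # hypothetical score in [0, 1], not a real Meta signal
    is_reshare: bool = False


def engagement_feed(posts):
    """Order posts by the hypothetical engagement score, highest first."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def chronological_feed(posts):
    """Order posts by time only, newest first, ignoring engagement."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def without_reshares(posts):
    """Drop reshared posts, keeping the original order of the rest."""
    return [p for p in posts if not p.is_reshare]


# Made-up sample data for illustration.
SAMPLE_POSTS = [
    Post("alice", datetime(2020, 10, 1, 9, 0), 0.4),
    Post("bob", datetime(2020, 10, 1, 12, 0), 0.1),
    Post("carol", datetime(2020, 10, 1, 10, 0), 0.9, is_reshare=True),
]

if __name__ == "__main__":
    for name, feed in [
        ("engagement", engagement_feed(SAMPLE_POSTS)),
        ("chronological", chronological_feed(SAMPLE_POSTS)),
        ("no reshares", without_reshares(SAMPLE_POSTS)),
    ]:
        print(name, [p.author for p in feed])
```

In this toy data the reshared, high-engagement post leads the engagement-ranked feed, drops to second place in the chronological feed, and disappears entirely when reshares are filtered out. The sketch changes only what users see; it says nothing about attitudes, and the studies found those did not shift.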

Chapter 2: The Bigger Picture

Despite the findings, researchers concluded that merely altering the algorithm is insufficient to curtail polarization or misinformation in the short term. They posited that other elements, such as social norms, media literacy, and civic education, might play a more crucial role in shaping political beliefs and behaviors.

“The insights from these papers provide critical insight into the black box of algorithms, giving us new information about what sort of content is prioritized and what happens if it is altered,” stated Talia Stroud from the University of Texas at Austin, who co-leads the research project.

Joshua Tucker, a politics professor at New York University and one of the researchers, noted, “The results suggest that social media platforms are not the primary cause of polarization in America. Instead, they mirror existing societal divisions.”

The studies indicate that while the design of tech platforms can influence users' exposure to misinformation and like-minded communities, America’s increasing polarization cannot be solely blamed on social media.

The findings also raise important questions about how accountable Meta and similar tech companies should be for their algorithms and the effects those algorithms have on society. As regulators and lawmakers deliberate on these matters, more research and greater transparency will be essential to inform effective policies and practices.

Relevant articles:

- New study shows just how Facebook’s algorithm shapes conservative and liberal bubbles, OPB, July 27, 2023

- New research suggests Facebook algorithm doesn’t drive polarization, Axios, July 28, 2023

- New research on Facebook shows the algorithm isn’t entirely to blame for political polarization, CNBC, July 27, 2023

- Changing Facebook’s algorithm won’t fix polarization, new study finds, Washington Post, July 27, 2023