Effective Strategies for Monitoring Data Pipelines in 2024

Chapter 1: Understanding Data Pipelines

Data pipelines serve as a crucial element in contemporary data-centric organizations, facilitating the seamless transfer of information from various source systems to target destinations, such as Data Warehouses or Data Lakehouses. For Data Engineers responsible for constructing and overseeing these pipelines, effective monitoring is paramount. Neglecting this aspect can lead to data loss or inaccuracies.

The task of monitoring these pipelines can be quite challenging, as even minor glitches or errors can significantly disrupt downstream processes. Below, I outline three key practices for effective monitoring of data pipelines.

Section 1.1: Establishing Key Performance Indicators (KPIs)

The initial step in monitoring data pipelines is to define Key Performance Indicators (KPIs). KPIs are quantifiable metrics that reflect the performance of a pipeline. These indicators should align closely with the pipeline's objectives, offering a transparent view of its operational health.

Key KPIs for data pipelines may include:

- Data Volume: This KPI measures the amount of data passing through the pipeline. A drop below expected levels may signal a problem with the pipeline or its data sources.

- Latency: Latency indicates the time taken for data to flow through the pipeline. Elevated latency can signal bottlenecks or challenges within specific components.

- Error Rates: This metric tracks the frequency of errors within the pipeline, aiding in the identification and resolution of issues.
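As a rough illustration, the three KPIs above could be derived from a per-run record along these lines. This is a minimal sketch, not a prescribed implementation: the `PipelineRun` record, its field names, and the sample numbers are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PipelineRun:
    """One execution of a pipeline (hypothetical record layout)."""
    started_at: datetime
    finished_at: datetime
    rows_read: int
    rows_failed: int


def compute_kpis(run: PipelineRun) -> dict:
    """Derive data volume, latency, and error rate from a single run."""
    latency_seconds = (run.finished_at - run.started_at).total_seconds()
    # Guard against division by zero when a run read no rows at all.
    error_rate = run.rows_failed / run.rows_read if run.rows_read else 0.0
    return {
        "data_volume": run.rows_read,
        "latency_seconds": latency_seconds,
        "error_rate": error_rate,
    }


# Made-up sample run: 50,000 rows in 12 minutes, 25 of them failed.
start = datetime(2024, 1, 15, 2, 0, 0)
run = PipelineRun(start, start + timedelta(minutes=12), rows_read=50_000, rows_failed=25)
kpis = compute_kpis(run)
print(kpis)
```

In practice these values would come from the orchestrator's run metadata or from audit tables in the warehouse rather than from hand-built records.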

Section 1.2: Utilizing Appropriate Monitoring Tools

To prevent errors and protect data quality proactively, several factors need to be considered before the pipelines are built. A previous article of mine covers critical aspects of data integration; see the links below:

- Best Practices for Data Engineering

- How to Set Up Stable Data Pipelines

Good design alone is not enough, however: robust monitoring remains indispensable. Data pipelines are complex, often with numerous components and dependencies, so real-time monitoring tools are vital for maintaining oversight and addressing issues quickly. These tools should offer the following features:

- Alerting: The capability to send notifications when KPIs deviate from acceptable thresholds.

- Visualization: An intuitive interface that allows teams to quickly pinpoint problems.

- Historical Data: Access to historical data enables teams to identify trends and potential issues proactively.

With real-time monitoring tools, organizations can detect and rectify pipeline problems before they escalate.
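To make the alerting and historical-data features more concrete, here is a minimal sketch of two checks a monitoring job might run. The function names, the threshold values, and the three-sigma rule are assumptions chosen for illustration; real monitoring tools typically provide their own configuration for this.

```python
from statistics import mean, stdev


def check_thresholds(kpis: dict, thresholds: dict) -> list[str]:
    """Return one alert message per KPI outside its (low, high) range."""
    alerts = []
    for name, (low, high) in thresholds.items():
        value = kpis.get(name)
        if value is None:
            alerts.append(f"{name}: no value reported")
        elif not (low <= value <= high):
            alerts.append(f"{name}: {value} outside [{low}, {high}]")
    return alerts


def is_anomalous(history: list[float], latest: float, max_sigma: float = 3.0) -> bool:
    """Flag a value more than max_sigma standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > max_sigma


# Hypothetical thresholds and KPI values for one run.
thresholds = {
    "data_volume": (40_000, 60_000),
    "latency_seconds": (0, 900),
    "error_rate": (0.0, 0.001),
}
latest_kpis = {"data_volume": 12_000, "latency_seconds": 720.0, "error_rate": 0.0005}
alerts = check_thresholds(latest_kpis, thresholds)

# Row counts from earlier runs serve as the historical baseline.
volume_history = [50_000, 51_200, 49_500, 50_300, 49_900]
volume_is_anomalous = is_anomalous(volume_history, latest_kpis["data_volume"])
```

Fixed thresholds are easy to reason about, while the baseline comparison adapts as volumes grow; the two can be combined. The resulting messages would then be routed to whatever notification channel the team uses.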

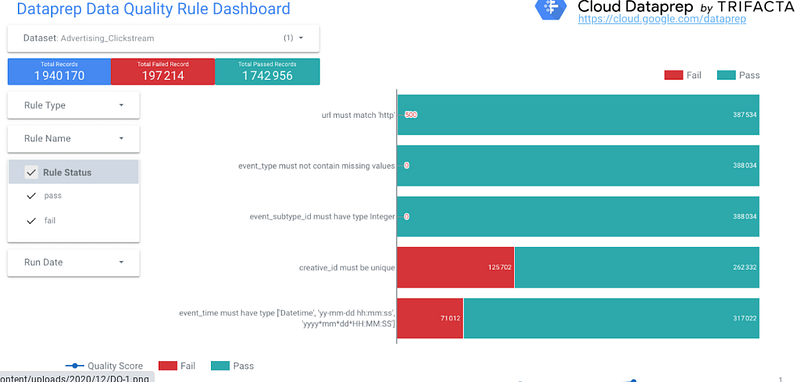

The figure above shows an example of a monitoring dashboard. It was built with BigQuery, Dataprep, and Looker Studio, which I have found useful in my own work. Other tools and cloud providers offer comparable solutions.

Chapter 2: Implementing Continuous Integration and Deployment

The adoption of a Continuous Integration and Continuous Deployment (CI/CD) framework can significantly enhance the efficiency of deployment and monitoring processes. Just as it's important to follow best practices during data pipeline construction, automating the creation, testing, and deployment of code changes reduces the likelihood of introducing errors.

The advantages of this methodology include:

- Automated Testing: This feature enables rapid identification of errors, ensuring that code alterations do not lead to new issues.

- Version Control: This allows teams to monitor changes within the pipeline and easily revert to prior versions when necessary.

- Release Management: Proper release management guarantees that code modifications are appropriately deployed and configured in the production environment.
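The automated-testing point can be illustrated with a small example. This is only a sketch: `normalize_order` is a hypothetical transformation step invented for the example, and the tests are plain functions with `assert` statements that a runner such as pytest could collect on every commit.

```python
def normalize_order(record: dict) -> dict:
    """Hypothetical transformation: trim the ID and convert cents to a decimal amount."""
    if record.get("amount_cents") is None:
        raise ValueError("amount_cents is required")
    return {
        "order_id": str(record["order_id"]).strip(),
        "amount": record["amount_cents"] / 100,
    }


def test_converts_cents_and_trims_id():
    result = normalize_order({"order_id": " A-17 ", "amount_cents": 1999})
    assert result == {"order_id": "A-17", "amount": 19.99}


def test_rejects_missing_amount():
    # A record without an amount should fail loudly instead of loading bad data.
    try:
        normalize_order({"order_id": "A-18"})
    except ValueError:
        return
    raise AssertionError("expected ValueError for a missing amount")
```

Running such tests in the CI stage means a change that breaks the transformation is caught before it is deployed, rather than surfacing later as bad data downstream.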

While the first two recommendations focus primarily on monitoring the data pipelines themselves, the third emphasizes overseeing the deployment process. By implementing a CI/CD framework, organizations can minimize errors, allowing teams to concentrate on maintaining and enhancing the overall health of their data pipelines.

Summary

Monitoring data pipelines is vital for their effective and efficient operation. By establishing KPIs, leveraging real-time monitoring tools, and adopting a CI/CD process, organizations can swiftly identify and resolve potential issues. These best practices empower organizations to construct robust and reliable data pipelines, driving their data-driven initiatives forward.

Sources and Further Readings

[1] Alteryx|Trifacta, Setting Up Data Quality Monitoring For Cloud Dataprep Pipelines (2020)
